EU Sets Global Standard for AI Regulation

- Date published: 12 December, 2023

- by Cristina Vanberghen

The European Union (EU) has achieved a groundbreaking feat in the realm of artificial intelligence (AI) by becoming the first major jurisdiction to agree definitive standards for AI implementation. The genesis of the AI Act traces back to April 2021, when the European Commission unveiled its initial proposal. Subsequently, the Council and the European Parliament worked to refine the proposed regulations, culminating in a consensus text for the act. The AI Act was a priority for the Spanish Presidency, which made dedicated efforts to conclude negotiations by December.

The rationale behind the EU’s decision to enact specific legislation for AI stems from several factors. Firstly, the swift evolution of AI has sparked concerns regarding potential risks, including discrimination, privacy infringements, and safety issues. Secondly, the EU sought to establish a level playing field for AI companies within the EU, ensuring uniform adherence to regulations. Thirdly, the EU aimed to foster the responsible development and utilization of AI across the European Union.

Delving into the key features of the EU’s Artificial Intelligence Act (AIA), it is crucial to highlight its emphasis on regulating “high-risk” AI systems deployed in sectors prone to significant harm, such as critical infrastructure, education, human resources, and law enforcement. The focus is squarely on cultivating responsible AI practices in these impactful domains.

Deal!#AIAct pic.twitter.com/UwNoqmEHt5

— Thierry Breton (@ThierryBreton) December 8, 2023

Addressing concerns related to the use of AI in critical decision-making processes, the AIA imposes stringent obligations on high-risk AI systems. It underscores the importance of human oversight and transparency, mandating developers and operators to ensure human control over these systems. This prevents them from functioning autonomously in critical decision-making processes. Transparency is a prevailing theme, with the AIA requiring comprehensive technical documentation for high-risk AI systems, encompassing detailed explanations of algorithms, training data, and potential risks. Additionally, the act mandates the implementation of risk management systems to identify, assess, and mitigate potential harms associated with these systems.

An overarching concern is how the AIA protects individuals from potentially misleading AI interactions. The answer lies in an amplified focus on transparency. The AIA mandates that AI systems engaging with humans explicitly inform users that they are interacting with a machine. This requirement aims to cultivate informed decision-making and mitigate unintentional reliance on AI systems.

The EU’s AIA represents a comprehensive framework for regulating AI development and deployment, prioritizing responsible AI practices while encouraging innovation in this transformative technology. By focusing on high-risk systems, emphasizing human supervision, and promoting transparency, the AIA aims to harness the power of AI while safeguarding against potential misuse.

One of the key provisions of the AIA is a ban on biometric categorisation systems that use sensitive characteristics, such as political, religious, or philosophical beliefs, sexual orientation, or race.

This ban is designed to prevent the use of AI to discriminate against individuals based on their personal characteristics.

The ban on biometric categorization is poised to exert a significant influence on the development and utilization of AI in various sectors, particularly in the realm of law enforcement. Facial recognition technology, commonly employed by law enforcement to identify suspects and track individuals, may face increased challenges due to the prohibition on biometric categorization. This restriction aims to hinder the potential discriminatory use of technology in law enforcement practices. Similarly, in the realm of employment, where some employers deploy AI to screen job applicants based on facial features, the ban could impede discriminatory practices. Moreover, in the domain of insurance, where AI is utilized to assess risk based on an individual’s facial features, the prohibition on biometric categorization may present obstacles for insurance companies seeking to use this technology in a discriminatory manner.

Supporters of the ban contend that it is imperative for safeguarding individuals against discrimination and upholding fundamental rights. They argue that AI, if unchecked, has the potential to magnify existing inequalities, leading to a society where individuals are unjustly evaluated based on their personal characteristics. Conversely, opponents of the ban assert that AI can be leveraged for beneficial purposes, such as identifying criminals and preventing terrorist attacks. The debate underscores the delicate balance between harnessing AI’s potential for societal good and preventing its misuse for discriminatory practices.

The AIA also prohibits the untargeted scraping of facial images from CCTV footage, aiming to curb the use of AI for the indiscriminate collection and storage of large volumes of facial images without a clear purpose or justification. This form of scraping poses a threat to privacy and points toward a surveillance society in which individuals’ privacy is persistently jeopardized. The ban carries significant implications for the advancement and application of facial recognition technology, particularly in domains such as crime prevention and public safety. It may introduce challenges for public safety officials seeking to leverage this technology for the protection of the public.

However, it’s crucial to acknowledge certain considerations surrounding this ban. Notably, the prohibition on untargeted facial recognition scraping is not absolute, incorporating narrow exceptions permitting its use for law enforcement purposes. This nuanced approach raises concerns about potential implications for the development of facial recognition technology. The ban, while intended to foster privacy protection, may instigate a chilling effect, deterring companies from investing in research and development due to uncertainties about the legality of their products in the EU.

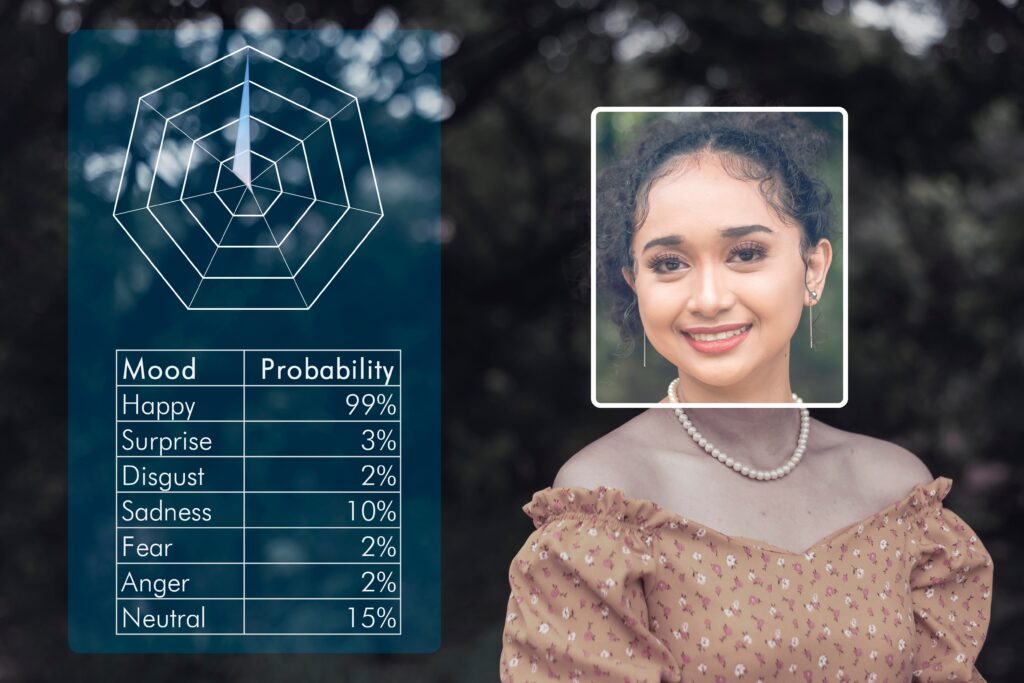

The EU AI Act would ban the use of emotion recognition technology in the workplace and schools.

This prohibition is crafted to safeguard individuals’ privacy, dignity, and respect, ensuring that emotion recognition technology is deployed without discrimination. Emotion recognition technology, a facet of artificial intelligence, delves into analyzing facial expressions, body language, and voice patterns to discern emotions. Despite its capabilities, the utilization of emotion recognition technology harbors various potential risks, notably concerning privacy. This technology has the capacity to amass extensive data on individuals’ emotions, opening the door to discriminatory practices. For instance, employers might leverage emotion recognition technology to identify employees experiencing unhappiness or stress, subsequently influencing decisions related to hiring, promotions, or termination of employment.

It is important to note that the application of emotion recognition technology in the workplace is subject to divergent regulations and ethical considerations across regions, spanning Europe, China, the United States, and India. The disparate landscape underscores the need for comprehensive frameworks and guidelines to govern the ethical and responsible use of emotion recognition technology, fostering a balanced approach that prioritizes individual rights and well-being.

The European Union (EU) adopts a cautious stance marked by stringent regulations regarding emotion recognition in the workplace. Both the EU’s General Data Protection Regulation (GDPR) and the proposed Artificial Intelligence Act (AIA) impose significant limitations on the utilization of emotion recognition technology within professional settings. Emphasizing transparency, data protection, and safeguards against potential discrimination and misuse, these regulations underscore a commitment to ethical and responsible deployment.

In contrast, China adopts a less restrictive approach to emotion recognition in the workplace. The Chinese government’s substantial investments in the development and deployment of emotion recognition technology are accompanied by a scarcity of regulations governing its use. This regulatory gap raises concerns regarding the potential misuse of the technology for surveillance, discrimination, and labor exploitation.

The United States follows a more decentralized regulatory model for emotion recognition in the workplace, with regulations varying across states and localities. While some states have enacted laws restricting the technology’s use, others lack specific regulations altogether. This regulatory patchwork complicates the establishment of consistent standards and safeguards for the technology’s deployment.

In India, the use of emotion recognition in the workplace is still in its nascent stages, accompanied by a paucity of regulations governing its application. The limited awareness and understanding of the technology’s potential risks and limitations pose a risk of misuse, particularly in the absence of adequate safeguards.

The proposed ban on emotion recognition in the workplace and schools carries several potential implications, including a reduction in workplace surveillance. Additionally, schools would no longer have the option to employ emotion recognition technology for monitoring students’ emotions, potentially contributing to a decrease in anxiety and stress levels among students.

#AIAct #trilogue +16h while you were sleeping. And ongoing. pic.twitter.com/vwH7VaKdWf

— Věra Jourová (@VeraJourova) December 7, 2023

The EU AI Act would ban the use of social scoring systems in the EU.

Social scoring, a system utilizing AI to evaluate individuals based on their behavior and social interactions, has been implemented in certain countries, raising concerns about its potential to infringe on human rights and contribute to a surveillance society. The EU AI Act introduces a ban on social scoring with the primary aim of safeguarding individuals’ privacy, dignity, and freedom of expression. This prohibition seeks to prevent the discriminatory use of AI and the exertion of control over individuals’ behavior.

The implications of the ban on social scoring within the EU AI Act are multifaceted. On one hand, it promises a reduction in surveillance, fostering an environment where individuals’ rights are upheld, and AI is deployed responsibly. Governments and companies would be precluded from employing social scoring systems to monitor and manipulate individuals, fostering a more open and democratic society where individuals can freely express themselves without fear of reprisal. Moreover, the ban serves to protect privacy, freedom of expression, and individuals from discrimination based on their social score.

However, it’s essential to note that the ban on social scoring is not absolute. Specific exceptions exist, permitting the use of this technology for well-defined purposes, such as identity verification or the provision of financial services. This nuanced approach ensures a balanced application of AI while mitigating potential abuses and reinforcing responsible use within defined boundaries.

The EU AI Act proposes a pivotal ban on the creation and deployment of AI systems strategically crafted to manipulate or exploit human behavior.

This sweeping prohibition extends to systems designed to influence individuals’ decision-making processes, disregarding their autonomy and free will. It specifically targets systems that exploit vulnerabilities, whether emotional states, cognitive biases, or a lack of knowledge, and thereby amplify social and economic inequalities. This bold stance aims to curb the potential harm and exploitation inherent in AI systems, signaling a commitment to responsible and ethical AI development and deployment.

For instance, the ban holds the capacity to thwart AI systems from manipulating individuals into making detrimental decisions, such as coercing them into unnecessary purchases or steering them towards risky financial investments. Beyond the direct protection it affords, the ban serves broader purposes, acting as a catalyst for fostering trust and transparency in the development and deployment of AI systems. It signals to the public that AI is being harnessed in a responsible and ethical manner, contributing to a positive perception of AI’s societal role.

Moreover, the ban is poised to stimulate innovation in ethical AI. By establishing clear boundaries, it encourages companies to develop AI systems that prioritize transparency, fairness, and the preservation of human autonomy. This not only aligns with ethical principles but also reinforces a commitment to responsible AI practices, ultimately contributing to the advancement of technology that respects and enhances human well-being.

Facial Recognition and Its Potential Issues

The agreement does not include an unconditional ban on facial recognition. However, it does limit the use of facial recognition in several ways. For example, there is an unconditional ban on live facial recognition for real-time tracking of people in public spaces.

Facial recognition technology, a rapidly evolving tool for identifying individuals in images and videos, holds diverse applications ranging from security and law enforcement to marketing. Despite its potential benefits, concerns loom large, especially regarding privacy. This technology, capable of amassing extensive facial data, raises alarms about tracking movements, identifying connections, and even predicting behavior. Discrimination is also a worry, with biases in facial recognition potentially leading to misidentification, particularly affecting minorities or individuals with specific facial features. Lack of transparency exacerbates the issue, as the opaque and secretive nature of facial recognition use makes it challenging for individuals to comprehend how their data is utilized and contest any errors.

The EU AI Act addresses these concerns through stringent restrictions on facial recognition technology. Provisions include a prohibition on real-time tracking in public spaces, a mandate for high accuracy in facial recognition systems, and the requirement for mandatory audits to ensure lawful and ethical usage.

However, this limited ban on facial recognition might prompt an upswing in the adoption of alternative technologies. Companies and organizations could pivot towards options like gait recognition or voice recognition to achieve similar objectives without running afoul of the ban. This shift underscores the dynamic nature of technological advancements and the need for ongoing regulatory vigilance.

The discourse around EU institutions greenlighting dystopian digital surveillance isn’t one I align with. The crucial aspect lies in the meticulous implementation of legislation. For instance, in the EU, the General Data Protection Regulation (GDPR) already governs and safeguards the use of facial recognition technology for law enforcement. It mandates prior judicial authorization and limits the technology’s usage to specific, well-defined purposes. Furthermore, the upcoming AIA will not only prohibit real-time tracking in public spaces but also impose stringent criteria for accuracy and fairness in law enforcement’s use of facial recognition systems.

In contrast, the United States lacks federal-level regulations overseeing the use of facial recognition technology in law enforcement. This absence has led to a patchwork of regulations across different states and localities, resulting in varying degrees of utilization among law enforcement agencies.

Conversely, China showcases extensive use of facial recognition technology for law enforcement, surpassing both Europe and the US. The Chinese government’s heavy investment in this technology has led to widespread deployment in various domains. Facial recognition cameras are omnipresent in public spaces like airports, train stations, and malls, enabling pervasive citizen tracking and identification. China’s ambitions even extend to a social credit system leveraging facial recognition to monitor behavior. While rewarding positive conduct, this system penalizes individuals engaging in negative activities. Moreover, facial recognition is employed to quell dissent by identifying and monitoring individuals participating in protests or political activism.

The varying extents and purposes of facial recognition usage in different regions reflect the diversity in regulatory frameworks and societal approaches toward balancing security and privacy.

Facial recognition technology is gaining prominence in India, particularly in bolstering security at national events, border control points, and religious pilgrimage sites. A notable initiative was the Ministry of Home Affairs’ 2019 pilot project during the Kumbh Mela, a massive Hindu pilgrimage, deploying facial recognition to identify individuals with criminal records, missing persons, and suspected ties to terrorism.

Police departments across India are increasingly leveraging facial recognition to enhance crime investigations. In 2020, the Delhi Police introduced a system to analyze CCTV footage, aiding in the apprehension of several suspects.

The regulatory framework governing facial recognition in public spaces in India comprises the Information Technology Act, 2000 (IT Act) and the yet-to-be-enacted Personal Data Protection Bill, 2019 (PDP Bill). The IT Act oversees the collection, storage, and use of personal data, including facial images. The forthcoming PDP Bill proposes more stringent data protection measures, emphasizing the necessity of informed consent for collecting and using personal data.

Addressing privacy concerns, the Indian government is contemplating regulations to govern facial recognition. The PDP Bill introduces robust safeguards for the processing and storage of personal data, including facial images. It also introduces the concept of a data fiduciary, placing heightened responsibilities on organizations handling personal data to ensure privacy and compliance with data protection laws.

Additionally, there are discussions about establishing a regulatory body tasked with overseeing facial recognition technology. This body would issue guidelines, conduct audits, and address complaints related to the technology’s usage.

The landscape of facial recognition in public spaces in India is rapidly evolving, with both government and private sectors adopting the technology while actively addressing associated privacy concerns. The enactment of the PDP Bill and the establishment of a regulatory body are anticipated to play pivotal roles in shaping the responsible and ethical use of facial recognition in India.

As we contemplate potential policy adjustments in light of the evolving global landscape, it becomes imperative to scrutinize the persisting challenges stemming from the existing legal and policy frameworks governing facial recognition technology. The current deployment of facial recognition by law enforcement agencies in Europe without a solid legal foundation raises substantial apprehensions. This unchecked practice not only poses a significant threat to privacy and civil liberties but also opens the door to potential discriminatory uses.

In response to these concerns, European governments are urged to proactively address the unregulated utilization of facial recognition technology. Establishing comprehensive regulations is crucial to ensure its deployment aligns with both legality and ethical standards, safeguarding individual rights and fostering a responsible framework for its application.

According to a report from the independent investigative journalism portal Disclose.ngo, the French police are currently employing facial recognition technology without a legal foundation. Published on the same day as the discussions for the new Artificial Intelligence Act (EUAIAct), the report reveals that the police have been utilizing this technology to identify individuals in public spaces, including protests and demonstrations.

Based on interviews with police officers and access to internal documents, the report highlights that officers are regularly using facial recognition technology despite the absence of a legal framework governing its use. While acknowledging the legal risks involved, the interviewed officers believe that the benefits of employing the technology outweigh the potential drawbacks. Internal documents obtained by Disclose.ngo indicate that the police are cognizant of the technology’s potential for discriminatory use. However, there is no indication of plans to implement safeguards to prevent such misuse.

“In Germany, the Privacy Commissioner's report revealed unauthorized use of facial recognition technology by the police at demonstrations, with inadequate anonymization of collected data and insufficient information provided to individuals."

Dr. Cristina Vanberghen

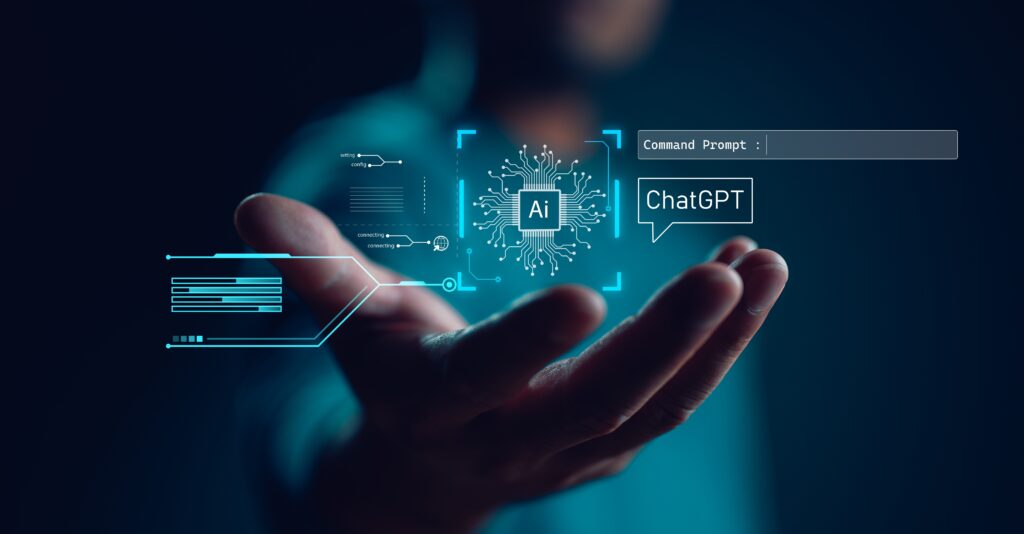

The second topic of the AI Act that took negotiators the most time was the set of requirements for general-purpose artificial intelligence systems. General-purpose AI systems include large language models (LLMs) that have been trained on massive amounts of data. These models are capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering questions in an informative way.

Basic requirements for general-purpose AI systems under the AI Act will include transparency, explainability, and robustness.

Developers of general-purpose AI systems will need to provide information about how their systems work, including their training data, algorithms, and biases. This information will need to be made available to users and to the public. The AI Act also requires developers to conduct impact assessments of their systems to identify any potential risks. These assessments will need to be made publicly available.

Developers of general-purpose AI systems will also need to be able to explain how their systems make decisions. This means that they will need to be able to provide justifications for the system’s outputs, to identify potential biases, and to discuss how the system could be improved. The AI Act does not specify a particular method for explaining AI systems, but it does require that the explanations be understandable to users and to the public.

Developers of general-purpose AI systems will also need to ensure that their systems are robust to adversarial attacks. This means that the systems should not be easily fooled or manipulated. The AI Act does not specify a particular method for making AI systems robust, but it does require that developers take appropriate measures to protect their systems from adversarial attacks.

The AI Act also includes specific requirements for large language models (LLMs). These requirements are designed to ensure that LLMs are used safely and responsibly. For example, developers of LLMs will need to take measures to prevent the use of LLMs for malicious purposes, such as spreading misinformation or generating hate speech.

Currently, only OpenAI’s GPT-4 model meets the EU’s threshold for a general-purpose AI system. This means that GPT-4 will be subject to the AI Act’s requirements for transparency and explainability. However, other LLMs, such as Google’s LaMDA and Microsoft’s Turing NLG, are also approaching this threshold. It is likely that these models will also be subject to the AI Act in the future.

The AI Act exempts open-source models from the additional transparency requirements. This means that developers of open-source models will not be required to disclose their code or explain their decisions to the public. This exemption was likely requested by Germany and France, which have companies that develop such models.

The exemption for open-source models may have several practical implications. First, it may make it more difficult for researchers and developers to understand how these models work, as they will not have access to the source code. Second, it may limit the ability of regulators to assess the safety and reliability of these models. Third, it may make it more difficult for users to understand the limitations of these models.

The AI Act’s requirements for general-purpose AI systems are a step in the right direction. These requirements will help to ensure that these powerful models are used safely and responsibly.

The AI Act establishes a robust enforcement mechanism to ensure compliance with its requirements. Violations of the Act will be subject to significant penalties, including fines of up to €35 million or 7% of global annual turnover for serious infringements, such as the use of high-risk AI systems without prior authorization. For lesser infringements, such as the provision of incorrect information, fines of up to €7.5 million or 1.5% of turnover may be imposed.
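To make the two penalty tiers concrete, the arithmetic can be sketched in a few lines of Python. This is an illustration only, not a statement of how any authority would calculate a fine. The tier names and the `max_fine_eur` helper are hypothetical, and the sketch assumes that the applicable ceiling is the higher of the fixed amount and the turnover-based amount, which the passage above does not spell out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """One penalty tier: a fixed cap in euros and a turnover share in basis points."""
    fixed_cap_eur: int
    turnover_share_bp: int  # 700 bp = 7%, 150 bp = 1.5%


# Figures quoted in the article; the tier names are hypothetical labels.
SERIOUS_INFRINGEMENT = FineTier(fixed_cap_eur=35_000_000, turnover_share_bp=700)
INCORRECT_INFORMATION = FineTier(fixed_cap_eur=7_500_000, turnover_share_bp=150)


def max_fine_eur(tier: FineTier, global_annual_turnover_eur: int) -> int:
    """Return the ceiling of the fine for a tier, in whole euros."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Integer arithmetic in basis points avoids floating-point rounding.
    turnover_based = global_annual_turnover_eur * tier.turnover_share_bp // 10_000
    # ASSUMPTION: the higher of the two amounts is the applicable ceiling.
    return max(tier.fixed_cap_eur, turnover_based)


print(max_fine_eur(SERIOUS_INFRINGEMENT, 1_000_000_000))  # 70000000
print(max_fine_eur(SERIOUS_INFRINGEMENT, 100_000_000))    # 35000000
print(max_fine_eur(INCORRECT_INFORMATION, 100_000_000))   # 7500000
```

Under that assumption, a company with €1 billion in global turnover faces a ceiling of €70 million for a serious infringement, because 7% of turnover exceeds the €35 million fixed amount, while a company with €100 million in turnover faces the fixed €35 million.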

Citizens will also have the right to request explanations from the operators of AI systems that have affected their rights. This will provide individuals with greater transparency and accountability regarding the use of AI technologies.

Comprehensive governance structure for AI

To effectively oversee the AI Act’s implementation, the EU has established a comprehensive governance structure. This includes:

- an AI Office within the European Commission, responsible for overseeing the most advanced AI models, promoting standards, and enforcing the rules;
- an independent Scientific Panel of experts, which will provide guidance on foundation models, including those with significant societal impact;
- a multi-stakeholder AI Board composed of representatives from EU member states, which will serve as a coordination platform and provide advice to the Commission;
- an Advisory Forum, which will bring together industry representatives, SMEs, start-ups, civil society organizations, and academia to provide technical expertise and insights into the AI landscape.

This well-structured governance framework is designed to facilitate effective regulation, coordination, and guidance, ensuring that EU citizens can harness the benefits of AI while enjoying robust safeguards against potential risks. The diverse perspectives brought forth by the Scientific Panel, AI Board, and Advisory Forum collectively contribute to a comprehensive and informed approach to AI governance within the European Union.

The text of the agreement will be published, and the Council and the European Parliament have two months to formally adopt it. This means that they must vote on the text and there must be a majority in favor in both institutions. If the text is adopted by the European Parliament and the Council, it becomes law.

Once the text has been adopted, it is published in the Official Journal of the European Union and enters into force two years after its adoption. The regulation will have a significant impact on the development and use of AI in Europe.

The European Commission will be responsible for implementing the AI Act and will be required to provide guidance and support to businesses and organizations that are affected by the regulation. Businesses and organizations will have two years to comply with the AI Act. There will be several different ways for businesses and organizations to comply with the AI Act. These could include training staff, updating software, and conducting impact assessments.

There will be several different penalties for non-compliance with the AI Act. These could include fines, bans on the use of AI systems, and even criminal prosecutions.

The AI Act is a complex piece of legislation, and it is important that businesses and organizations are aware of the requirements and are prepared to comply.

Professor Dr. Cristina Vanberghen

Professor Vanberghen is an academic and political commentator, now based at the European University Institute in Florence, and a senior expert with the European Commission. A French-Romanian national, she is an internationally recognised expert in digitalization, artificial intelligence, consumer policy and cybersecurity. She has been consistently ranked as a “Top EU Influencer” by ZN Consulting.
